Gesture-Sensing Radars Project

This project has developed a series of very low-power radar systems intended for sensing noncontact gestures in interactive spaces. Although angular resolution is quite coarse (at least with these systems), these radars are unaffected by lighting, background, and most clutter. They detect people via reflections from their skin, so they are unaffected by clothing. They can also be embedded into an installation behind a projection screen or nonconductive wall, sensing right through it to the people on the other side.

Several devices have been developed, starting with the early 2.4 GHz Doppler micropatch system designed in collaboration with Matt Reynolds for the Brain Opera, and subsequently used in the Magic Carpet system.

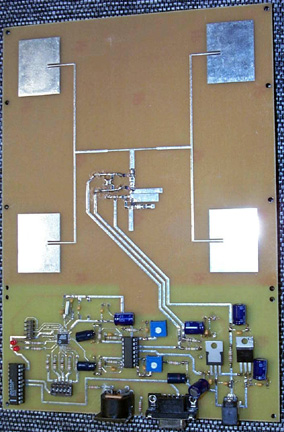

Our latest Doppler radar (see photo above) is based on a modified version of the original device, but it includes an embedded microprocessor that analyzes the Doppler signals and directly produces a set of features (gross motion, fast motion, average direction). These features are sent offboard via a serial or MIDI connection. Unlike the previous design, it requires no additional analog or digital electronics to extract these parameters. This system has been used in our Interactive Window installation.

In this device, two signals are taken from the microwave feed line at points 1/8 wavelength apart. These signals are amplified by a MAX4169 op-amp and fed directly into the ADC inputs of a Cygnal C8051F007 microcontroller. The op-amp provides enough gain to sense people at distances of up to around 10 meters, depending on local conditions. The gain can be adjusted to optimize the response for the intended target.
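A rough worked check of the 1/8-wavelength tap spacing follows. It assumes that, at a given tap, the relative phase between the outgoing and reflected waves changes by twice the one-way phase (2βd for a tap offset d). Under that assumption, a λ/8 offset gives the 90° difference that a direction measurement needs. The 2.4 GHz carrier (taken from the original device) and the `velocity_factor` parameter are assumptions. On a real microstrip feed line, the guided wavelength is shorter than in free space.

```python
# Sketch: quadrature tap spacing on a Doppler radar feed line.
# Assumptions (not from the design notes): 2.4 GHz carrier, and a hypothetical
# velocity factor describing how much shorter the guided wavelength is.
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def tap_spacing_m(carrier_hz: float, velocity_factor: float = 1.0) -> float:
    """Return one-eighth of the guided wavelength on the feed line, in meters."""
    guided_wavelength = velocity_factor * C / carrier_hz
    return guided_wavelength / 8.0


def round_trip_phase_deg(spacing_m: float, carrier_hz: float,
                         velocity_factor: float = 1.0) -> float:
    """Phase offset between the two taps' detected Doppler signals, in degrees.

    The outgoing and reflected waves travel in opposite directions, so their
    relative phase changes by 2 * (2*pi*d / lambda) over a tap offset d.
    """
    guided_wavelength = velocity_factor * C / carrier_hz
    return math.degrees(2.0 * 2.0 * math.pi * spacing_m / guided_wavelength)
```

At 2.4 GHz in free space, `tap_spacing_m(2.4e9)` is about 15.6 mm. A velocity factor below 1 shrinks the spacing proportionally, but the phase offset stays at 90°.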

The raw signals are analyzed to compute three data streams. The first, a "general motion" stream, is proportional to the intensity of the reflected radar signal. It therefore increases as an object moves closer to the radar, and also with the object's size and reflectivity. The second, a "speed" stream, measures the frequency of the detected Doppler signal, so it is proportional to the absolute speed of the moving object. The third, a "direction" stream, is generated by comparing the phase shifts of the radar signals from the two feedline taps. It gives a rough measure of whether the object is generally moving toward or away from the radar along the boresight.
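The three streams can be illustrated with a minimal sketch that treats the two amplified tap signals as an I/Q pair. This is not the C8051F007 firmware. The following are all assumptions chosen for clarity:

- the windowing;
- the zero-crossing frequency estimate;
- the cross-product direction measure;
- the 2.4 GHz carrier;
- the sign convention.

The speed conversion uses the standard Doppler relation v = f_d * c / (2 * f0).

```python
# Sketch of the motion / speed / direction features from two quadrature
# Doppler channels. Illustrative only: formulas, window, and sign convention
# are assumptions, not the radar board's actual algorithm.
import math
from typing import Sequence, Tuple

C = 299_792_458.0  # speed of light, m/s


def doppler_features(i_ch: Sequence[float], q_ch: Sequence[float],
                     sample_rate_hz: float,
                     carrier_hz: float = 2.4e9) -> Tuple[float, float, float]:
    """Return (motion, speed_m_s, direction) for one window of samples."""
    n = len(i_ch)
    if n < 2 or len(q_ch) != n:
        raise ValueError("need two equal-length windows of at least 2 samples")

    # Remove DC offsets (amplifier outputs are biased into the ADC range).
    i_mean = sum(i_ch) / n
    q_mean = sum(q_ch) / n
    i = [x - i_mean for x in i_ch]
    q = [x - q_mean for x in q_ch]

    # 1. General motion: RMS magnitude of the I/Q vector; grows with
    #    reflected power (closer, larger, or more reflective targets).
    motion = math.sqrt(sum(a * a + b * b for a, b in zip(i, q)) / n)

    # 2. Speed: one Doppler cycle produces two zero crossings on a channel.
    crossings = sum(1 for a, b in zip(i, i[1:]) if (a < 0) != (b < 0))
    window_s = n / sample_rate_hz
    doppler_hz = crossings / (2.0 * window_s)
    speed = doppler_hz * C / (2.0 * carrier_hz)

    # 3. Direction: I[k-1]*Q[k] - Q[k-1]*I[k] is the sine of the phase step,
    #    positive for one rotation sense and negative for the other. The
    #    normalized mean lies in [-1, 1]; which sign means "approaching"
    #    depends on which tap is called I (an assumption here).
    cross = [i[k - 1] * q[k] - q[k - 1] * i[k] for k in range(1, n)]
    total = sum(abs(x) for x in cross)
    direction = sum(cross) / total if total > 0 else 0.0

    return motion, speed, direction
```

Consider a hand moving at about 1 m/s in front of a 2.4 GHz radar. It produces a Doppler frequency of roughly 16 Hz, so even a modest ADC sample rate suffices for these features. Noise in the phase comparison would also be consistent with the direction stream being the noisiest of the three.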

The radar board then produces either a raw serial stream or a MIDI stream of this data. In MIDI mode, the values are sent as pitch wheel (pitch bend) messages, so that the data can be used directly by a wide range of commercial synthesizers and software packages. MIDI or serial transmission is selected with a bank of DIP switches on the board. The MIDI channel number is also selectable. Unfortunately, MIDI uses a non-standard serial transmission speed (31.25 kbaud), so serial and MIDI transmission cannot be active simultaneously.
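In MIDI mode, each feature can be carried as a 14-bit pitch-bend value. A minimal sketch of the message encoding follows. It is based on the standard MIDI 1.0 pitch-bend format: a status byte of 0xE0 plus the channel, followed by the low and high 7-bit data bytes. The helper names and the linear feature scaling are hypothetical, and the board's actual scaling and channel assignment are not documented here.

```python
# Sketch: packing a feature value into a MIDI pitch-bend message.
# Assumption: features are linearly scaled to 0..16383; the mapping of feature
# streams to channels is hypothetical, not the board's documented behavior.

MIDI_BAUD = 31_250  # standard MIDI serial rate, bits per second


def pitch_bend_message(channel: int, value: int) -> bytes:
    """Return the 3-byte pitch-bend message for MIDI channel 1..16."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be 1..16")
    if not 0 <= value <= 0x3FFF:
        raise ValueError("pitch-bend value must be 0..16383")
    status = 0xE0 | (channel - 1)  # pitch-bend status nibble + channel
    lsb = value & 0x7F             # low 7 bits are sent first
    msb = (value >> 7) & 0x7F      # then the high 7 bits
    return bytes([status, lsb, msb])


def scale_to_14bit(x: float, lo: float, hi: float) -> int:
    """Map x in [lo, hi] onto 0..16383 (clamped, rounded half up)."""
    frac = min(max((x - lo) / (hi - lo), 0.0), 1.0)
    return int(frac * 0x3FFF + 0.5)


def message_time_s(n_bytes: int) -> float:
    """Wire time for n_bytes at MIDI speed (1 start + 8 data + 1 stop bit)."""
    return n_bytes * 10 / MIDI_BAUD
```

For example, `pitch_bend_message(1, 8192)` yields the centered value E0 00 40. At 31,250 baud, each 3-byte message takes 0.96 ms, so three feature messages take under 3 ms per update.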

Below is a bar graph that shows data received as a hand is moved back and forth with progressively increasing speed. Notice that as the peaks in the "motion" stream become closer together (corresponding to increasing speed), the "speed" graph exhibits larger peaks. The "direction" graph is noisy but nonetheless correlates significantly with the motion.

We have also experimented with ranging radar systems, for example to determine the location of the person closest to a large interactive display. This photograph shows a student (Che King Leo) testing the response of a swept-Doppler ranging system intended to measure the level of fluid in large chemical tanks. The system was developed by sponsor colleagues at the former Saab Marine division (now Rosemount).
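This sketch assumes that "swept-Doppler" here means a linear frequency sweep (FMCW). Under that assumption, a stationary reflector such as a fluid surface produces a beat frequency proportional to its range. The standard relation is R = c * f_beat / (2 * S), where S is the sweep slope. The bandwidth and sweep-duration values in the example are hypothetical, not the parameters of the Saab/Rosemount system.

```python
# Sketch: range from the beat frequency of a linear FMCW sweep.
# Assumption: the "swept-Doppler" system behaves as linear FMCW; all numeric
# parameters used with these helpers are hypothetical examples.

C = 299_792_458.0  # speed of light, m/s


def fmcw_range_m(beat_hz: float, bandwidth_hz: float, sweep_s: float) -> float:
    """Range R = c * f_beat / (2 * S), with sweep slope S = bandwidth / time."""
    slope = bandwidth_hz / sweep_s  # Hz per second
    return C * beat_hz / (2.0 * slope)


def range_resolution_m(bandwidth_hz: float) -> float:
    """Nominal range resolution c / (2 * B), set by the sweep bandwidth."""
    return C / (2.0 * bandwidth_hz)
```

Consider a hypothetical 1 GHz sweep over 1 ms. A 10 kHz beat then corresponds to about 1.5 m, and the nominal resolution is about 15 cm. A moving target would add a Doppler term to the beat frequency, which this stationary-target sketch ignores.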

More information on the original Doppler system can be found in the publications posted on our Magic Carpet project website.

Joe Paradiso