Ubiquitous Sensor Portals

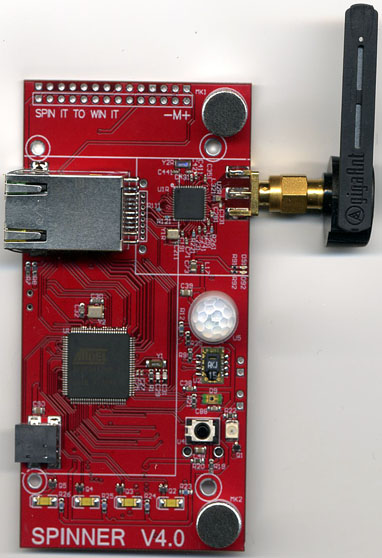

We have built a sensor network of 45 "Ubiquitous Sensor Portals" distributed throughout the real-world Media Lab. Each portal is mounted on a pan/tilt platform and carries an array of sensors along with audio and video capabilities. Video is acquired by a 3-megapixel camera mounted above a touch-screen LCD. The video board, which also hosts the touch screen and a speaker, is driven by a TI DaVinci processor (an ARM9 running Linux paired with a C64x+ DSP core for video processing).

The sensors and an 802.15.4 radio are mounted on a daughter card (see below), which can either connect directly to a wired network for standalone operation or run as a slave to the video board (as in the configuration shown above). The sensor board runs on an AVR32 microcontroller (with audio DSP utilities and an off-chip CODEC) and features stereo microphones, a PIR motion sensor, a humidity/temperature sensor, a light sensor, and two IR communication protocols, so it can detect and talk to any Media Lab badge within its line of sight. The daughter card also carries several status LEDs, and its radio communicates with, and coarsely localizes, many of the wearable sensors that several groups in the Media Lab are developing.

These devices are intended for several purposes. They were originally designed by Mat Laibowitz to support his Spinner project. They will also support work in Cross Reality (sometimes termed "X-Reality" or "Dual Reality"), where events in the real world drive phenomena in a virtual environment that is unconstrained by time, space, or physics (for example, the real world can be browsed seamlessly from within the virtual environment). For our initial X-Reality work, we will be using SecondLife by Linden Lab.

Each portal will have a counterpart in SecondLife that looks like the construct below (designed by Drew Harry). SecondLife visitors can see live video from any real-world portal by touching the screen of its virtual counterpart with their avatar. They can also communicate with the real-world portal by touching a "Talk" button on the virtual portal. At this point, communication from SecondLife to the real world is text-only (as is standard in SecondLife at the moment), but if the request to talk is granted in the real world, audio from the sensor board also streams from the real world to the virtual portal, enabling two-way communication (we are working on two-way audio/video between SecondLife and each physical portal). The virtual portals will also show "ghosts" at their sides to indicate the presence of particular individuals standing in front of the real-world portal (as detected via IR from their badges). A trace at the bottom of each virtual portal will reflect the activity level around the real-world portal (a thicker trace indicates more activity). The virtual portals also extend in time: SecondLife visitors can view past video by touching the screens further back in the virtual portal.
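To make the real-to-virtual mapping above more concrete, here is a minimal Python sketch of how one portal's sensor readings might be turned into the state its virtual counterpart renders: one ghost per IR-detected badge, a bottom trace whose width grows with activity, and a most-recent-first buffer backing the "past" screens. Everything here is hypothetical: the class and function names, the equal weighting of PIR motion and sound in the activity score, the trace-width bounds, and the buffer depth are illustrative assumptions, not the portals' actual software or any SecondLife scripting interface.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical trace-width bounds in virtual-world units (illustrative only).
MIN_TRACE_WIDTH = 0.05
MAX_TRACE_WIDTH = 0.5


@dataclass
class PortalObservation:
    """Hypothetical snapshot of one real-world portal's sensor data."""
    portal_id: str
    badge_ids: set[str]   # badges currently seen over IR
    motion_events: int    # PIR triggers in the last sampling window
    sound_level: float    # microphone level, expected in 0.0-1.0


@dataclass
class VirtualPortalState:
    """What the virtual counterpart would render for one portal."""
    portal_id: str
    ghosts: list[str] = field(default_factory=list)
    trace_width: float = MIN_TRACE_WIDTH


def activity_level(obs: PortalObservation, max_motion: int = 20) -> float:
    """Blend motion and sound into a 0-1 score (equal weighting is an assumption)."""
    motion = min(max(obs.motion_events, 0), max_motion) / max_motion
    sound = min(max(obs.sound_level, 0.0), 1.0)  # clamp out-of-range readings
    return 0.5 * motion + 0.5 * sound


def update_virtual_portal(obs: PortalObservation) -> VirtualPortalState:
    """Map one observation to ghosts and a trace whose width grows with activity."""
    span = MAX_TRACE_WIDTH - MIN_TRACE_WIDTH
    return VirtualPortalState(
        portal_id=obs.portal_id,
        ghosts=sorted(obs.badge_ids),  # one ghost per detected badge
        trace_width=MIN_TRACE_WIDTH + activity_level(obs) * span,
    )


class PortalHistory:
    """Most-recent-first clip buffer: screen 0 is live, higher indices are further back."""

    def __init__(self, depth: int = 4):
        # With maxlen set, appendleft() silently drops the oldest clip when full.
        self._clips: deque = deque(maxlen=depth)

    def record(self, clip_id: str) -> None:
        self._clips.appendleft(clip_id)

    def screen(self, index: int) -> Optional[str]:
        if 0 <= index < len(self._clips):
            return self._clips[index]
        return None
```

Keeping the mapping as a pure function of one observation means the virtual side can simply re-render whenever a new sensor report arrives, without tracking incremental changes.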

We will implement a variety of privacy protocols on these devices so that users remain in full control of the content the portals capture and of how the servers render that content to other users.

Media:

[MOV] This video clip gives an overview of the portal's features and shows the most recent SecondLife view of the portals' virtual end, created by Drew Harry as an implementation of Cross Reality. At the time of this video, we streamed full video and audio from the portals into SecondLife; we have since also streamed video from SecondLife back into the portal by running a SecondLife client on a server, scraping its window, and streaming that media back to the portal.

[WMV] Operation of trial software running on the portal. A movie plays while the portal is idle. When the screen is touched, a Media Lab map is displayed along with icons representing the other portals (green if active, red if disabled). The map then zooms to the touched area, now also showing motion and sound-level data for each portal in view. A second touch on a portal icon streams video from that portal to the user's portal. The "Virtual" button streams video from our region of SecondLife to the portal, again by streaming media from the SecondLife window of a server running a SecondLife client (as the video shows, this stream was not yet fully optimized for the CODEC running on the portal, but this can easily be improved). This is a very simple application to show the portal system in operation; many dynamic media applications are now being developed for the portals and will be posted shortly.
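The map interaction described above boils down to three small operations: coloring each icon by portal status, selecting the portals inside the zoomed region, and hit-testing a touch against the icons to decide which portal's video to request. A minimal Python sketch follows; the `PortalIcon` fields, the square zoom window, the map-coordinate units, and the touch radius are all hypothetical choices for illustration, not the trial software's actual design.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class PortalIcon:
    """Hypothetical map icon for one portal (coordinates in arbitrary map units)."""
    portal_id: str
    x: float
    y: float
    active: bool
    motion: float = 0.0  # recent motion level, shown only when zoomed in
    sound: float = 0.0   # recent sound level, shown only when zoomed in


def icon_color(icon: PortalIcon) -> str:
    """Green for active portals, red for disabled ones."""
    return "green" if icon.active else "red"


def icons_in_view(icons: list[PortalIcon], cx: float, cy: float,
                  half_size: float) -> list[PortalIcon]:
    """Icons inside a square zoom window centered on the touch point."""
    return [i for i in icons
            if abs(i.x - cx) <= half_size and abs(i.y - cy) <= half_size]


def icon_at(icons: list[PortalIcon], x: float, y: float,
            radius: float = 1.0) -> Optional[PortalIcon]:
    """Nearest icon within `radius` of a touch, or None if the touch missed."""
    def dist(i: PortalIcon) -> float:
        return math.hypot(i.x - x, i.y - y)

    hits = [i for i in icons if dist(i) <= radius]
    return min(hits, key=dist, default=None)
```

In this sketch, a touch that hits an icon would be followed by a request to stream that portal's video; how the real system issues that request is not described here and is left out.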

Publications:

J. Lifton, M. Laibowitz, D. Harry, N.-W. Gong, M. Mittal, and J. A. Paradiso, "Metaphor and Manifestation - Cross Reality with Ubiquitous Sensor/Actuator Networks," IEEE Pervasive Computing, vol. 8, no. 3, July-September 2009, pp. 24-33.

M. Laibowitz, N.-W. Gong, and J. A. Paradiso, "Wearable Sensing for Dynamic Management of Dense Ubiquitous Media," Proc. 6th International Workshop on Body Sensor Networks (BSN09), IEEE CS Press, 2009, pp. 3-8.

Project Team:

Mat Laibowitz

Lead hardware/software & system designer

Drew Harry

X-Reality Architect & Software

Bo Morgan

Server Software

Nan-Wei Gong

UI Software & Privacy Protocol

Michael Lapinski

Networking & Installation

Alex Reben

Visualization Software & Installation

Matt Aldrich

Software Support & Installation

Mark Feldmeier

Hardware Support

Faculty Leader