About

Motivation

Acoustic musical instruments have traditionally featured static mappings from input gesture to output sound, because their input affordances are tied to the physics of their sound-production mechanism. More recently, digital sound synthesizers and electronic music controllers have removed this tight coupling between input gesture and resultant sound, making a vast range of input-to-output mappings possible, along with an essentially unlimited set of timbres. This change in the way sound can be produced and controlled brings with it the burden of design: compelling and natural mappings from gesture to sound must now be designed in order to produce a playable electronic musical instrument.

FlexiGesture is a device that allows flexible assignment of input gesture to output sound, and so acts as a laboratory for furthering our understanding of the connection between gesture and sound.

People

The FlexiGesture project was started and is currently headed by the Responsive Environments Group at the MIT Media Lab. The project currently includes:

- Faculty: Joe Paradiso

- Graduate Students: David Merrill

System Overview

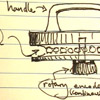

FlexiGesture is an embodied multi-degree-of-freedom gestural input device. It was built to support six-degree-of-freedom inertial sensing, five isometric buttons, two digital buttons, two-axis bend sensing, isometric rotation sensing, and isotonic electric field sensing of position. Software handles the incoming serial data and implements a trainable interface through which a user can explore the sounds possible with the device, associate a custom inertial gesture with a sound for later playback, create custom mappings from input degrees of freedom (DOF) to effect modulation, and play with the resulting configuration.
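To make the idea of a DOF-to-effect mapping concrete, here is a minimal Python sketch of how one such mapping might be represented and applied to a frame of sensor readings. It is an illustration only, not the FlexiGesture software itself: the `Mapping` class, the `modulate` function, the DOF names (`bend_x`, `button_1_pressure`), the effect parameter names, and the value ranges are all hypothetical, and a simple clamped linear rescaling is assumed.

```python
from dataclasses import dataclass


@dataclass
class Mapping:
    """One user-defined link from an input DOF to an effect parameter.

    All names and ranges here are hypothetical, chosen for illustration.
    """
    source: str     # input DOF name, e.g. "bend_x"
    target: str     # effect parameter name, e.g. "filter_cutoff"
    in_min: float   # lowest expected sensor reading
    in_max: float   # highest expected sensor reading
    out_min: float  # effect value at in_min
    out_max: float  # effect value at in_max

    def apply(self, value: float) -> float:
        # Clamp the reading to the input range, then rescale linearly.
        clamped = min(max(value, self.in_min), self.in_max)
        t = (clamped - self.in_min) / (self.in_max - self.in_min)
        return self.out_min + t * (self.out_max - self.out_min)


def modulate(frame: dict, mappings: list) -> dict:
    """Convert one frame of sensor readings into effect parameter values.

    DOFs without a mapping are ignored; mappings whose source is
    missing from the frame are skipped.
    """
    return {m.target: m.apply(frame[m.source])
            for m in mappings if m.source in frame}


# Example configuration: bend controls a filter, button pressure a reverb mix.
mappings = [
    Mapping("bend_x", "filter_cutoff", 0.0, 1023.0, 200.0, 8000.0),
    Mapping("button_1_pressure", "reverb_mix", 0.0, 255.0, 0.0, 1.0),
]
frame = {"bend_x": 1023.0, "button_1_pressure": 0.0, "accel_z": 512.0}
params = modulate(frame, mappings)
```

In this sketch, reassigning a gesture to a different effect only means editing the `mappings` list, which is the kind of flexibility the trainable interface is meant to give the user.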

History

Fall 2003 - present.
